In late July 2025, Amazon’s AI coding breach made headlines. A malicious actor using the alias lkmanka58 submitted a pull request to the public GitHub repo of Amazon’s Q Developer Extension for Visual Studio Code. The pull request contained a prompt that instructed the AI assistant to “clean a system to a near‑factory state and delete file‑system and cloud resources” (CSO Online; Last Week in AWS). The malicious code shipped in version 1.84.0, released July 17, and was downloaded by nearly one million users before Amazon pulled it (Tom’s Hardware; TechSpot; TechRadar).

Why Amazon’s AI coding breach signals deeper generative AI risks

Amazon’s AI coding breach exposed systemic security failures, not just a rogue commit. Generative AI agents that can run bash or AWS CLI commands can be weaponized via prompt injection (CSO Online). Amazon later said the code was malformed and likely would not have run, but some researchers disagree (TechRadar). Experts pointed out that open‑source workflows were poorly governed, enabling unauthorized merges without sufficient review (Hacker News; CSO Online).
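As a rough illustration of one review-time safeguard, the sketch below scans the added lines of a unified diff for text that reads like an instruction aimed at an AI agent. The pattern list and the function name `flag_added_lines` are hypothetical and chosen for this example only; keyword heuristics like these are easy to evade and would supplement human review, not replace it.

```python
import re

# Hypothetical phrase patterns for illustration; a real deployment would
# tune and extend these, and would still need human review.
SUSPICIOUS_PATTERNS = [
    re.compile(r"\byou are an? (ai|assistant|agent)\b", re.IGNORECASE),
    re.compile(r"\bignore (all )?(previous|prior) instructions\b", re.IGNORECASE),
    re.compile(
        r"\b(delete|wipe|erase)\b.*\b(file[- ]?system|cloud resources|home directory)\b",
        re.IGNORECASE,
    ),
    re.compile(r"\bfactory state\b", re.IGNORECASE),
]


def flag_added_lines(unified_diff: str) -> list[tuple[int, str]]:
    """Return (diff_line_number, text) for added lines matching any pattern."""
    findings = []
    for number, line in enumerate(unified_diff.splitlines(), start=1):
        # Added lines start with '+', but '+++' marks the file header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        text = line[1:]
        if any(pattern.search(text) for pattern in SUSPICIOUS_PATTERNS):
            findings.append((number, text.strip()))
    return findings
```

A CI job could run a check like this over each incoming pull request and require an extra maintainer approval whenever it returns any findings.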

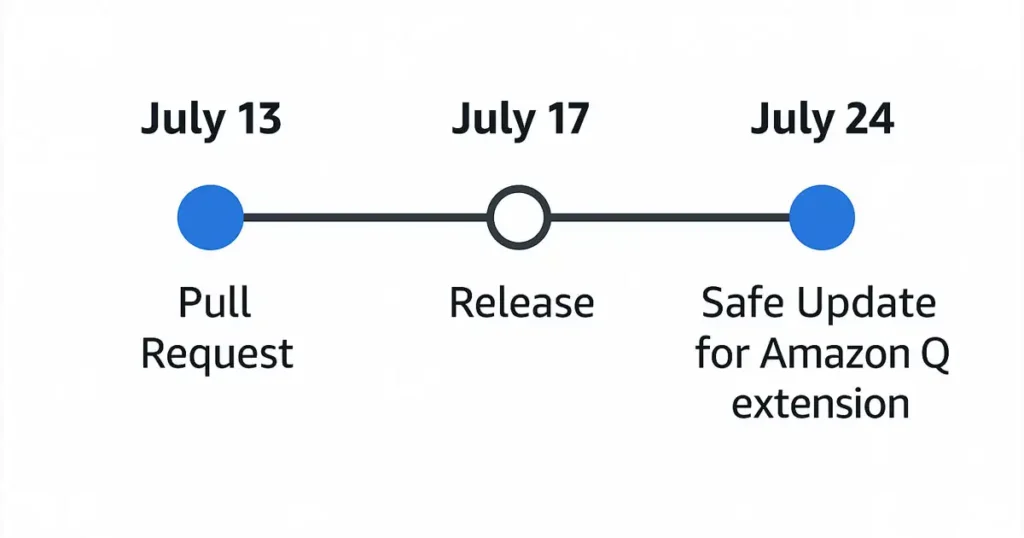

Timeline of the Amazon AI coding breach incident

| Date | Event |
|---|---|
| July 13, 2025 | Malicious pull request merged into GitHub repo |
| July 17, 2025 | Version 1.84.0 released to users |
| July 18, 2025 | Amazon updates contribution management guidelines |
| July 23–24, 2025 | Suspicious behavior detected; version deleted, clean 1.85.0 released |

Amazon silently removed the compromised release and quietly revised Git history, drawing criticism for lack of transparency (Tom’s Hardware; arXiv).

How Amazon responded to the AI coding breach

Amazon revoked compromised credentials, removed version 1.84.0 from the VS Code Marketplace, and issued version 1.85.0 on July 24 (Tom’s Hardware). AWS stated that no customer resources were impacted and urged users to update immediately (Last Week in AWS). Still, critics such as Corey Quinn characterized Amazon’s approach as “move fast and let strangers write your roadmap” (TechSpot).

Broader implications for generative AI and developer security

Amazon’s AI coding breach is part of a wider trend. Studies show AI code assistants often produce insecure code, and roughly 5–6% of popular VS Code extensions exhibit suspicious behaviors (Bloomberg). Academic research (e.g. the XOXO attacks described on arXiv) highlights how prompt‑poisoned contexts can make AI assistants generate vulnerable or dangerous outputs (arXiv). Relying on AI without safeguards can introduce serious supply chain and runtime vulnerabilities.

Key takeaways and developer recommendations

To reduce risk from incidents like the Amazon case:

- Don’t enable automatic merging on public repos; enforce strict pull‑request reviews and narrowly scoped permissions.

- Treat AI assistants that can execute commands like untrusted code: sandbox, audit, vet.

- Always audit AI‑generated code manually before deployment.

- Incorporate AI‑specific threat modeling into DevSecOps practices (e.g. guardrails, prompt‑injection detection).

- Maintain transparency and timely disclosure when breaches occur (Last Week in AWS; The Outpost; CSO Online).
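One way to treat a command-executing assistant as untrusted code is to gate every proposed command through an allowlist before anything runs. The following is a minimal sketch under stated assumptions: the `ALLOWED` table, the metacharacter set, and the function name `is_allowed` are all hypothetical, and the policy shown is not taken from Amazon Q or any other real product.

```python
import shlex

# Hypothetical allowlist: programs the agent may run, each mapped to its
# permitted subcommands. An empty set means no subcommand restriction.
ALLOWED = {
    "git": {"status", "diff", "log"},
    "ls": set(),
    "aws": {"sts"},
}

# Characters that would let one string chain, redirect, or substitute commands.
SHELL_METACHARACTERS = set(";|&><`$\n")


def is_allowed(command: str) -> bool:
    """Return True only if the command is a single allowlisted invocation."""
    if any(ch in SHELL_METACHARACTERS for ch in command):
        return False
    try:
        tokens = shlex.split(command)
    except ValueError:  # for example, unbalanced quotes
        return False
    if not tokens:
        return False
    program, args = tokens[0], tokens[1:]
    if program not in ALLOWED:
        return False
    subcommands = ALLOWED[program]
    if not subcommands:
        return True
    # Programs with a subcommand list must name one of them first.
    return bool(args) and args[0] in subcommands
```

In this sketch, `is_allowed("git status")` passes while `is_allowed("rm -rf /")` and `is_allowed("aws ec2 terminate-instances --instance-ids i-123")` are rejected. A command that passes should still be executed as a token list (for example with `subprocess.run(tokens)` and without `shell=True`), ideally inside a sandbox with least-privilege credentials, since an allowlist alone does not constrain what an approved program can do.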