Contents

- Introduction: The Revolution of Open Source AI Video Generation

- The Democratization of Advanced Video Technology

- Top Open Source AI Video Generation Models of 2025

- Specialized Capabilities and Use Cases

- The Technical Infrastructure Behind Success

- Real-World Applications and Impact

- Future Outlook and Continuous Innovation

- Getting Started with Open Source AI Video Generation

- Conclusion

Introduction: The Revolution of Open Source AI Video Generation

Open source AI video generation has advanced rapidly. In 2025, creators, developers, and researchers have access to sophisticated video generation capabilities that were once exclusive to tech giants with massive budgets.

Unlike proprietary solutions such as OpenAI’s Sora and Google’s Veo 2, open source AI video generation models can be run on your own hardware, customized, and used without per-clip licensing costs. These models are transforming how we approach video content creation, enabling everything from cinematic storytelling to rapid social media clip production.

The Democratization of Advanced Video Technology

Breaking Down Barriers to Entry

Open source AI video generation has fundamentally changed the creative landscape by removing traditional barriers. Previously, high-quality video generation required expensive proprietary systems that were out of reach for most creators. Today’s open-source models have democratized access to cutting-edge technology, helping creators, developers, and researchers create stunning cinematic experiences without prohibitive costs.

This democratization represents more than just cost savings – it’s about empowering creativity and innovation across diverse communities. Independent filmmakers, content creators, educators, and researchers can now experiment with advanced open source AI video generation techniques that were previously available only to well-funded corporations.

Top Open Source AI Video Generation Models of 2025

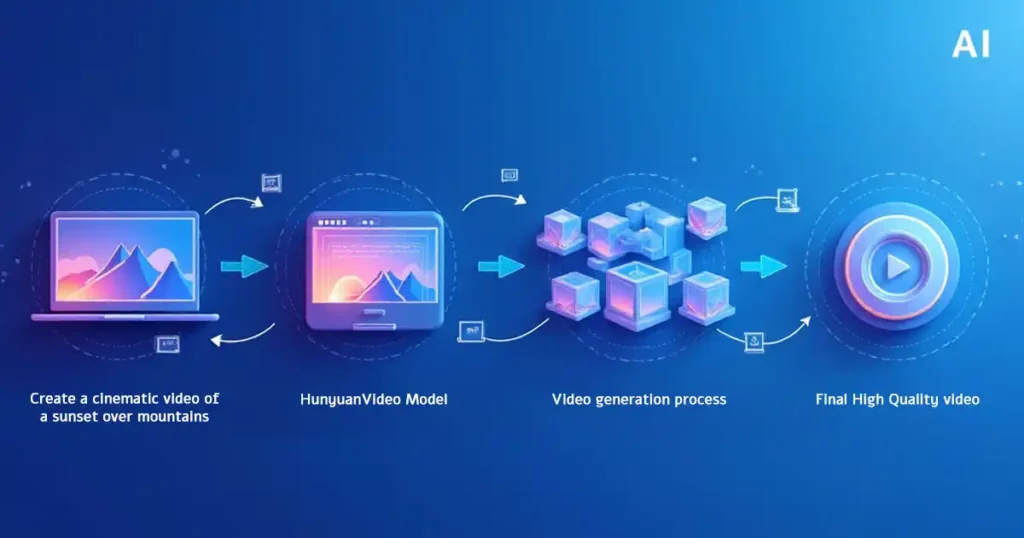

1. HunyuanVideo by Tencent: The Performance Leader

HunyuanVideo shows how far open source AI video generation has come. In Tencent’s reported evaluations, this 13-billion-parameter model outperforms closed state-of-the-art models such as Runway Gen-3. It excels in cinematic quality, motion accuracy, and ecosystem support, producing 15-second videos at 24 fps (360 frames) at 720p resolution.

What makes HunyuanVideo particularly impressive is its ability to maintain temporal consistency while delivering professional-grade output quality that rivals commercial solutions.
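The frame count quoted above follows directly from duration and frame rate. The short sketch below reproduces that arithmetic and estimates the uncompressed size of such a clip. It assumes that "720p" means 1280×720 pixels and that each pixel takes 3 bytes (8-bit RGB); neither assumption comes from the model's documentation, and the function names are illustrative.

```python
def frame_count(duration_s: float, fps: int) -> int:
    """Number of frames in a clip of the given duration and frame rate."""
    return round(duration_s * fps)


def raw_clip_megabytes(duration_s: float, fps: int, width: int, height: int) -> float:
    """Uncompressed size in MB (10**6 bytes), assuming 3 bytes per pixel (8-bit RGB)."""
    return frame_count(duration_s, fps) * width * height * 3 / 1e6


# Figures quoted above: 15 seconds at 24 fps.
hunyuan_frames = frame_count(15, 24)

# Assumption: "720p" is 1280x720 pixels.
hunyuan_raw_mb = raw_clip_megabytes(15, 24, 1280, 720)

print(hunyuan_frames)         # 360
print(round(hunyuan_raw_mb))  # 995
```

Roughly 1 GB of raw pixels for a single clip gives a sense of why temporal consistency across hundreds of frames is a demanding target.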

2. SkyReels V1: Cinematic Human Animation

Developed by Skywork AI, SkyReels V1 focuses specifically on cinematic-quality videos with realistic human portrayals. This open source AI video generation model features 33 distinct expressions and over 400 movement combinations, enabling expressive storytelling through 12-second videos at 24 fps with a resolution of 544×960.

The model’s specialization in human animation makes it particularly valuable for creators working on character-driven narratives and promotional content requiring authentic human representation.

3. Mochi 1: The Fine-Tuning Champion

Genmo’s Mochi 1 was billed at release as the largest openly released video generation model, at 10 billion parameters; HunyuanVideo (13 billion) and Wan 2.1 (14 billion) are now larger. This diffusion model stands out for high-fidelity output and strong prompt adherence. It also comes with an intuitive trainer that lets creators develop LoRA fine-tunes using their own videos, a degree of customization that closed models do not offer.
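To see why LoRA fine-tuning is practical on modest hardware, it helps to count parameters. LoRA (low-rank adaptation) keeps an original weight matrix frozen and trains two small matrices whose product is added to it. The sketch below compares the two counts for a single layer. The layer size and rank are hypothetical values chosen for illustration, not Mochi 1's actual dimensions.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two LoRA factors: A (rank x d_in) and B (d_out x rank)."""
    return rank * d_in + d_out * rank


def full_params(d_in: int, d_out: int) -> int:
    """Parameters in the full (frozen) weight matrix."""
    return d_in * d_out


# Hypothetical layer size and rank, for illustration only.
d_in, d_out, rank = 3072, 3072, 16

lora = lora_trainable_params(d_in, d_out, rank)
full = full_params(d_in, d_out)

print(lora, full, f"{lora / full:.2%}")  # 98304 9437184 1.04%
```

Under these assumed sizes, the fine-tune trains about 1% of the layer's parameters, which is what makes training on a creator's own footage feasible.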

4. LTXVideo: Efficiency Meets Quality

Lightricks’ LTXVideo addresses one of the biggest challenges in open source AI video generation: accessibility. Optimized for speed and efficiency, this model runs smoothly on cost-effective GPUs like the NVIDIA RTX A6000, requiring as little as 12GB VRAM. It’s perfect for rapid prototyping, social media clips, and real-time previews where speed and efficiency are paramount.

Specialized Capabilities and Use Cases

Multi-Modal Excellence

Open source AI video generation has evolved beyond simple text-to-video conversion. Models like Wan 2.1 by Alibaba showcase multi-tasking capabilities, supporting text-to-video, image-to-video, video editing, text-to-image, and even video-to-audio processing. This 14-billion-parameter model also offers multilingual capabilities, processing both English and Chinese fluently.

Music-Driven Video Creation

Allegro by RhymesAI specializes in music-driven open source AI video generation, creating videos that synchronize with music tracks. This model interprets audio elements such as rhythm, tempo, and emotional tone, translating them into compelling visual narratives.

The Technical Infrastructure Behind Success

Cloud Computing Solutions

The success of open source AI video generation relies heavily on accessible cloud computing infrastructure. Providers like Hyperstack offer high-end GPUs including NVIDIA A100, H100 PCIe, and H100 SXM configurations, making it possible for creators to access the computational power needed for these demanding models.
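A rough sense of why these models call for data-center GPUs comes from the memory needed just to hold their weights. The sketch below multiplies the parameter counts quoted in this article by the bytes per parameter for common number formats. It is a lower bound only: it ignores activations, the text encoder, and the video decoder, and the function name is illustrative.

```python
# Bytes needed to store one parameter in each number format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2}


def weight_memory_gb(params_billion: float, dtype: str = "bf16") -> float:
    """Memory for the weights alone, in GB (10**9 bytes).

    Billions of parameters times bytes per parameter gives GB directly.
    """
    return params_billion * BYTES_PER_PARAM[dtype]


# Parameter counts as quoted in this article.
models = {"HunyuanVideo": 13, "Mochi 1": 10, "Wan 2.1": 14}

for name, billions in models.items():
    print(f"{name}: {weight_memory_gb(billions)} GB in bf16, "
          f"{weight_memory_gb(billions, 'fp32')} GB in fp32")
```

At 16-bit precision the three models need about 26, 20, and 28 GB for weights alone, well above the 12GB figure quoted for LTXVideo.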

Community-Driven Development

The Hugging Face Diffusers library serves as the backbone for many open source AI video generation implementations. This community-driven library provides inference pipelines, fine-tuning tools, and memory and speed optimizations that make these models accessible to a broader audience.
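As a minimal sketch of what inference through Diffusers can look like, the example below generates a short clip with LTXVideo. Several details are assumptions to check against the current Diffusers documentation: the `Lightricks/LTX-Video` model ID, the requirement that the frame count has the form 8n + 1 and that width and height are divisible by 32, and the chosen resolution and step count. CPU offloading also needs the `accelerate` package. The helper names `snap_num_frames` and `generate_clip` are illustrative.

```python
def snap_num_frames(duration_s: float, fps: int) -> int:
    """Round a target frame count down to the form 8*n + 1 (minimum 9).

    Assumption: the pipeline expects frame counts of this form.
    """
    target = int(duration_s * fps)
    return max(9, (target - 1) // 8 * 8 + 1)


def generate_clip(prompt: str, out_path: str = "clip.mp4",
                  duration_s: float = 5, fps: int = 24) -> str:
    """Generate a clip with LTXVideo and save it as an MP4 file."""
    # Heavy imports stay inside the function so the helper above
    # can be used on a machine without a GPU or these packages.
    import torch
    from diffusers import LTXPipeline
    from diffusers.utils import export_to_video

    # Assumed model ID on the Hugging Face Hub.
    pipe = LTXPipeline.from_pretrained(
        "Lightricks/LTX-Video", torch_dtype=torch.bfloat16
    )
    # Moves submodules to the GPU only while they run:
    # slower, but lowers peak VRAM use.
    pipe.enable_model_cpu_offload()

    frames = pipe(
        prompt=prompt,
        width=704,   # assumed: both dimensions divisible by 32
        height=480,
        num_frames=snap_num_frames(duration_s, fps),
        num_inference_steps=50,
    ).frames[0]

    export_to_video(frames, out_path, fps=fps)
    return out_path


# Example call (downloads the model weights and needs a CUDA GPU):
# generate_clip("A red fox running through fresh snow at sunrise")
```

Calling `snap_num_frames(5, 24)` returns 113, the largest count of the form 8n + 1 that does not exceed the 120 frames a 5-second clip at 24 fps would otherwise contain.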

Real-World Applications and Impact

Content Creation Revolution

Open source AI video generation is revolutionizing content creation across multiple industries. Marketing agencies use these tools for rapid prototype development, while educators create engaging visual content for online learning platforms. Independent filmmakers experiment with concepts before committing to expensive production processes.

Research and Development

Academic institutions and research organizations leverage open source AI video generation models to explore new frontiers in computer vision, human-computer interaction, and creative AI applications. The open-source nature enables collaborative research and rapid iteration.

Future Outlook and Continuous Innovation

The trajectory of open source AI video generation points toward continued improvement in output quality and model capabilities. Anticipated developments include enhanced control mechanisms, improved temporal consistency, and more efficient resource utilization.

The community-driven nature of these projects supports continuous innovation, with contributions from researchers, developers, and creators worldwide. This collaborative approach accelerates progress while keeping the tools open and accessible.

Getting Started with Open Source AI Video Generation

For those interested in exploring open source AI video generation, the journey begins with understanding hardware requirements and choosing the right model for specific needs. Beginners might start with more accessible models like LTXVideo, while experienced users can explore the advanced capabilities of HunyuanVideo or Mochi 1.

The key is to experiment, learn from the community, and contribute back to the ecosystem that makes open source AI video generation possible.
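One way to organize the choice of model is a small lookup table built from the descriptions in this article. The sketch below is illustrative only: the strength tags are labels invented here to summarize each section, and parameter counts are left empty where the article gives none.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Model:
    name: str
    developer: str
    params_billion: Optional[float]  # None where the article gives no figure
    strengths: frozenset             # hypothetical tags summarizing the article


CATALOG = [
    Model("HunyuanVideo", "Tencent", 13, frozenset({"cinematic", "motion"})),
    Model("SkyReels V1", "Skywork AI", None, frozenset({"cinematic", "human-animation"})),
    Model("Mochi 1", "Genmo", 10, frozenset({"fine-tuning", "prompt-adherence"})),
    Model("LTXVideo", "Lightricks", None, frozenset({"speed", "low-vram", "prototyping"})),
    Model("Wan 2.1", "Alibaba", 14, frozenset({"multi-task", "multilingual"})),
]


def recommend(need: str) -> List[str]:
    """Return the names of models whose tags include the given need."""
    return [m.name for m in CATALOG if need in m.strengths]


print(recommend("low-vram"))     # ['LTXVideo']
print(recommend("fine-tuning"))  # ['Mochi 1']
print(recommend("cinematic"))    # ['HunyuanVideo', 'SkyReels V1']
```

A table like this is only a starting point; hands-on testing against your own prompts and hardware remains the real selection criterion.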

Conclusion

Open source AI video generation represents more than technological advancement – it embodies the democratization of creative tools and the power of collaborative innovation. As these models continue to evolve, they promise to unlock new forms of creative expression while maintaining the accessibility and transparency that define the open-source movement.

The future of video creation is open, accessible, and limited only by our imagination.
