OpenAI has made a significant move in the AI world with the introduction of its new ‘open-weight’ language models, gpt-oss-120b and gpt-oss-20b. This release, OpenAI’s first open-weight language model release since GPT-2, aims to make advanced AI more accessible to developers and businesses. The models are not truly ‘open source’ in the traditional sense, but they are ‘open weight,’ meaning the model parameters are freely available for download and can be customized by developers. This provides a balance of flexibility and safety: OpenAI has not published everything that went into building the models, but it allows broad use of the weights under the permissive Apache 2.0 license.

While not officially confirmed, many suspect this is the mysterious Horizon Alpha model previously seen on OpenRouter. Either way, this release marks a significant moment for the AI community: OpenAI has published the model weights themselves, which is what makes these models ‘open-weight’.

What are the New OpenAI Open Source Models?

OpenAI released two new models:

- gpt-oss-120b: The larger of the two, this model has 117 billion total parameters and is designed to run efficiently on a single 80 GB GPU. It achieves near-parity with OpenAI’s proprietary o4-mini model on core reasoning benchmarks.

- gpt-oss-20b: A more compact model with 21 billion total parameters, this one is built for efficiency. It can run on devices with just 16 GB of memory, making it ideal for local inference on consumer hardware and edge devices. It performs comparably to OpenAI’s proprietary o3-mini model on common benchmarks.

Both models use a Mixture of Experts (MoE) architecture, which helps them deliver strong performance with low latency and at a reduced cost. This architecture lets the models activate only a subset of their parameters for each token, making them more efficient than traditional dense models of the same total size.
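To make the "only a subset of parameters" idea concrete, here is a minimal sketch of top-k expert routing for a single token. It is a toy illustration, not OpenAI's implementation: the sizes (`DIM`, `NUM_EXPERTS`, `TOP_K`), the random router weights, and the trivial scaling "experts" are all assumptions chosen to keep the example small.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(x, router_weights, experts, top_k):
    """Route one token vector through its top_k experts and mix their outputs."""
    # Router: one logit per expert (dot product of x with that expert's router row).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    # Keep only the top_k highest-scoring experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Normalize the chosen experts' logits into mixing weights.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen

def make_expert(scale):
    """Toy expert: multiplies the input by a constant (stands in for an MLP)."""
    return lambda x: [scale * xi for xi in x]

random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2  # toy sizes, not the real model's configuration
experts = [make_expert(s) for s in range(1, NUM_EXPERTS + 1)]
router_weights = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output, used = moe_layer(token, router_weights, experts, TOP_K)
print(f"experts used: {used} ({len(used)} of {NUM_EXPERTS})")
```

Only `TOP_K` of the `NUM_EXPERTS` expert functions are called for the token, which is where the compute savings over a dense layer come from.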

Why Open-Source AI Models Matter

The benefits of open models like gpt-oss are numerous and compelling, particularly for developers, enterprises, and everyday users:

- Cost-Effectiveness: Open models can be significantly cheaper to run than frontier closed-source models.

- Flexibility and Customization: Users can fine-tune these models for their specific use cases and provide them with additional knowledge. This allows for unparalleled adaptability.

- Permissive Licensing: gpt-oss is released under the Apache 2.0 license, a permissive and widely used license that grants broad usage rights.

- Enhanced Security and Privacy: For enterprise organizations, open-source models provide an extra layer of security and privacy by enabling on-premises or self-hosted deployment on their own infrastructure.

- Local Inference & Accessibility: These models are well suited to on-device use cases, local inference, or rapid iteration without costly infrastructure. Downloading them also gives you access to a broad store of general knowledge while offline.

- Adjustable Reasoning: Users can adjust how much reasoning the models perform during their “chain of thought” process. This means you can set it low for quick answers or high for complex math, science, coding, and reasoning problems.
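As a sketch of what adjusting the reasoning level can look like in practice, the snippet below builds a chat-style message list. It assumes the convention of stating the level in the system message as `Reasoning: low`, `Reasoning: medium`, or `Reasoning: high`; check the model card for the exact format your runtime expects. The helper name `build_messages` is hypothetical.

```python
VALID_EFFORTS = ("low", "medium", "high")

def build_messages(user_prompt, reasoning_effort="medium"):
    """Build a chat message list that requests a given reasoning level.

    Assumption: the serving stack reads the level from a system-message line
    of the form "Reasoning: <level>".
    """
    if reasoning_effort not in VALID_EFFORTS:
        raise ValueError(f"reasoning_effort must be one of {VALID_EFFORTS}")
    return [
        {"role": "system", "content": f"Reasoning: {reasoning_effort}"},
        {"role": "user", "content": user_prompt},
    ]

# Quick factual lookup: keep reasoning short.
quick = build_messages("What is the capital of France?", "low")
# Multi-step problem: allow a longer chain of thought.
hard = build_messages("Prove that the square root of 2 is irrational.", "high")
print(quick[0]["content"])
print(hard[0]["content"])
```

The resulting list can be passed to any chat-completions style client that accepts `role`/`content` messages.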

The Architecture and Efficiency of GPTOSS

OpenAI has shared an unusual amount of detail about how these models were built, something it rarely does for its closed-source models.

- Mixture of Experts (MoE) Architecture: Each model is a transformer utilizing a Mixture of Experts (MoE) architecture, which helps reduce the number of active parameters needed to process input.

  - gpt-oss-120b activates only about 5.1 billion of its 117 billion parameters per token.

  - gpt-oss-20b activates 3.6 billion of its 21 billion parameters per token.

- Advanced Attention & Encoding:

  - They use alternating dense and locally banded sparse attention patterns, similar to GPT-3.

  - For inference and memory efficiency, they use grouped multi-query attention with a group size of 8.

  - RoPE (Rotary Positional Embedding) is used for positional encoding.

- Extensive Context Lengths: The gpt-oss models natively support context lengths of up to 128,000 tokens, with potential for further increase through tuning.

- Training and Data:

  - The models were trained on a high-quality, text-only dataset with a strong focus on STEM (Science, Technology, Engineering, and Math), coding, and general knowledge.

  - They use a superset of the tokenizer used for o4-mini and GPT-4o, and that tokenizer is also open-sourced.

  - Training involved a mix of reinforcement learning and techniques informed by OpenAI’s most advanced internal models, including o3 and other frontier systems. A particular emphasis was placed on reasoning efficiency and real-world usability.

  - Post-training included a supervised fine-tuning stage and a high-compute Reinforcement Learning (RL) stage, similar to o4-mini. The objective was to align the models with specific requirements and teach them to use chain-of-thought reasoning and tools before generating answers.
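The attention details above can be sketched in a few lines. The example below builds a dense causal mask and a locally banded (sliding-window) causal mask, alternates them across layers, and maps query heads to shared key/value heads with a group size of 8. The window size, the layer count, the head count, and which layers are banded versus dense are illustrative assumptions, not figures from the source.

```python
def causal_mask(seq_len, window=None):
    """mask[q][k] is True when query position q may attend to key position k.

    window=None gives dense causal attention; an integer window restricts each
    query to itself and the (window - 1) positions immediately before it.
    """
    mask = []
    for q in range(seq_len):
        row = []
        for k in range(seq_len):
            visible = k <= q  # causal: never look at future tokens
            if window is not None:
                visible = visible and (q - k) < window
            row.append(visible)
        mask.append(row)
    return mask

def layer_masks(num_layers, seq_len, window):
    """Alternate banded and dense masks (assumed: even layers are banded)."""
    return [
        causal_mask(seq_len, window if layer % 2 == 0 else None)
        for layer in range(num_layers)
    ]

def kv_head_for(query_head, group_size=8):
    """Grouped-query attention: each run of group_size query heads shares one KV head."""
    return query_head // group_size

SEQ_LEN, WINDOW, NUM_LAYERS, NUM_QUERY_HEADS = 6, 3, 4, 16  # toy values
masks = layer_masks(NUM_LAYERS, SEQ_LEN, WINDOW)
banded, dense = masks[0], masks[1]

last = SEQ_LEN - 1
print("banded layer, last token sees", sum(banded[last]), "positions")
print("dense layer, last token sees", sum(dense[last]), "positions")
print("distinct KV heads:", len({kv_head_for(h) for h in range(NUM_QUERY_HEADS)}))
```

Banded layers keep the per-token attention cost bounded by the window, while grouping query heads reduces the number of key/value tensors that must be cached during inference.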

Performance That Rivals Frontier Models

The benchmarks for gpt-oss are impressive, showing capabilities that put it on par with, and in some cases ahead of, leading closed-source models.

- Core Reasoning: The gpt-oss-120b model achieves near parity with OpenAI o4-mini on core reasoning benchmarks.

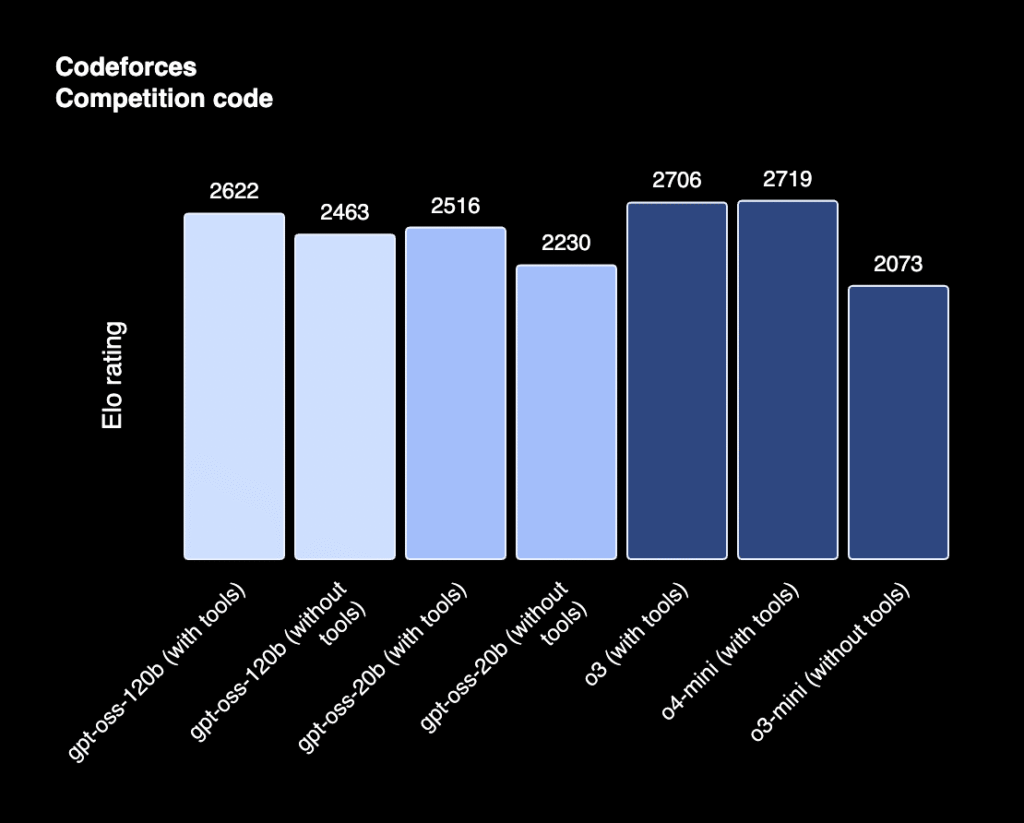

- Codeforces Competition:

  - The 120 billion parameter version with tools scored 2622, close to o3 with tools (2706).

  - The 20 billion parameter version with tools scored 2516, a strong result given its size.

  - These scores are strong enough to beat most human competitors at coding.

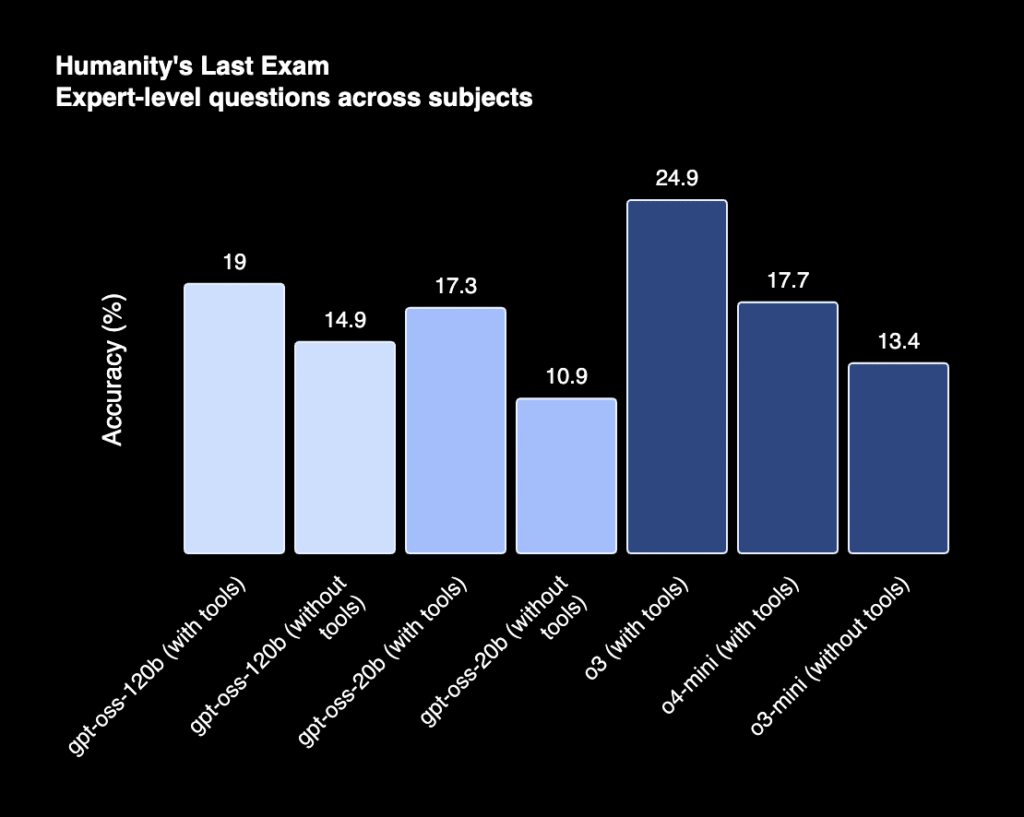

- Humanity’s Last Exam (Expert-Level Questions):

  - The 120 billion parameter version with tools scored 19%, compared to o3 with tools at 24.9%.

  - Notably, the open-weight 120B version with tools outperforms o4-mini with tools and o3-mini without tools.

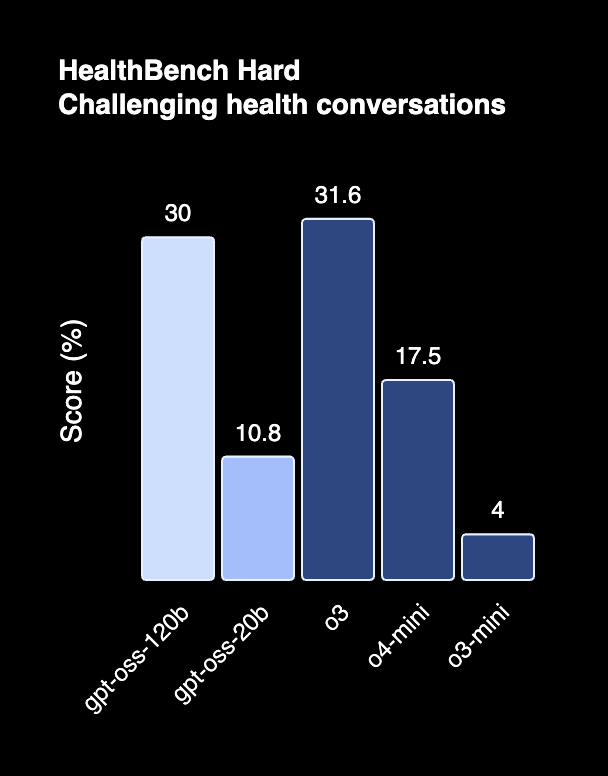

- HealthBench (Medical Benchmarks):

  - For realistic health conversations, the 120B scored 57.6, very close to o3’s 59.8.

  - On challenging health conversations, it scored 30 against o3’s 31.6.

- AIME (Competition Math): The 20 billion parameter version achieved 96% on AIME 2024 and even beat the 120 billion parameter version on AIME 2025.

- GPQA Diamond (PhD-Level Science):

  - The 120B scored 80.1, compared to o3’s 83.3.

  - The 20B version followed at 71.5.

- MMLU: The 120B achieved 90% and the 20B achieved 85.3%, both comparable to o3.

- Tau-Bench (Function Calling): The 120B scored 67.8, compared to o3’s 70.4, a respectable result.
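To put "comparable" in numbers, this short sketch divides each 120B score quoted above by the corresponding o3 score. The figures are copied from the list; the benchmark labels used as dictionary keys are shorthand for this example.

```python
# (gpt-oss-120b score, o3 score) pairs as quoted in the list above.
scores = {
    "Codeforces (with tools)": (2622, 2706),
    "Humanity's Last Exam (with tools)": (19.0, 24.9),
    "HealthBench, realistic": (57.6, 59.8),
    "HealthBench, challenging": (30.0, 31.6),
    "GPQA Diamond": (80.1, 83.3),
    "Tau-Bench (function calling)": (67.8, 70.4),
}

def relative_score(model_score, reference_score):
    """Fraction of the reference model's score that the model reaches."""
    return model_score / reference_score

ratios = {name: relative_score(a, b) for name, (a, b) in scores.items()}
for name, ratio in sorted(ratios.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:36s} {ratio:6.1%} of o3")
```

On these figures the 120B model lands within about five percent of o3 on everything except Humanity's Last Exam, where the gap is noticeably wider.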

Accessible Deployment: Running on Your Hardware

One of the most exciting aspects is the hardware efficiency of these models:

- 120 Billion Parameter Version: Can run efficiently on a single 80 GB GPU. This means it can even run on high-end consumer machines like a Mac with 96 GB unified memory or a PC with two A6000s.

- 20 Billion Parameter Version: Designed to run on edge devices with just 16 GB of memory, making it perfect for on-device applications.
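A back-of-the-envelope estimate shows why these memory budgets are plausible only if the weights are stored compactly. The source does not state the weight precision, so the two values below (16 bits per parameter, and roughly 4.25 bits per parameter as a stand-in for 4-bit block-quantized weights with a shared scale) are assumptions for illustration. The estimate covers weights only and ignores the KV cache and activations.

```python
def weight_memory_gb(total_params, bits_per_param):
    """Approximate weight storage in decimal gigabytes (weights only)."""
    return total_params * bits_per_param / 8 / 1e9

def fits(total_params, bits_per_param, budget_gb):
    """True when the weights alone fit inside the memory budget."""
    return weight_memory_gb(total_params, bits_per_param) <= budget_gb

# Total parameter counts and memory budgets as quoted in the article.
MODELS = {
    "gpt-oss-120b": (117e9, 80),
    "gpt-oss-20b": (21e9, 16),
}
# Assumed precisions, not stated in the source.
PRECISIONS = {"16-bit": 16, "~4.25-bit": 4.25}

for name, (params, budget_gb) in MODELS.items():
    for label, bits in PRECISIONS.items():
        size = weight_memory_gb(params, bits)
        verdict = "fits" if fits(params, bits, budget_gb) else "does not fit"
        print(f"{name} at {label}: {size:.1f} GB, {verdict} in {budget_gb} GB")
```

Under these assumptions, neither model's weights fit in the quoted budget at 16 bits per parameter, while both fit at roughly 4 bits, leaving some headroom for the rest of inference.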

Commitment to Safety and Responsible AI

OpenAI has also shared insights into the safety aspects of gpt-oss:

- Chain of Thought Transparency: OpenAI did not apply direct supervision to the chain of thought for either gpt-oss model. This allows developers to see the raw reasoning process, which can be helpful for detecting misbehavior. However, developers are advised not to show the raw chain of thought directly to users in applications, as it may contain hallucinated or harmful content; instead, it should be summarized and filtered.

- Harmful Data Filtering: During pre-training, harmful data related to chemical, biological, radiological, and nuclear (CBRN) content was filtered out.

- Robustness Against Malicious Fine-tuning: OpenAI directly assessed the risk of adversaries fine-tuning the open-weight models for malicious purposes, specifically in biology and cybersecurity. Its testing indicated that even with extensive fine-tuning, these maliciously fine-tuned models were unable to reach high capability levels for harmful applications, as defined by its Preparedness Framework.

- Red Teaming Challenge: To further enhance safety, OpenAI is hosting a $500,000 challenge for red teamers to identify safety issues within these models.
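The advice about not exposing raw chain of thought can be sketched as a small filter. This example assumes the model's output has already been parsed into messages tagged with a `channel` field, where `analysis` holds raw reasoning and `final` holds the user-facing answer; the dictionary shape and the helper names are hypothetical, so adapt them to whatever your parsing library returns.

```python
def user_visible_text(messages):
    """Return only the text meant for end users, dropping raw reasoning."""
    return "\n".join(m["content"] for m in messages if m.get("channel") == "final")

def reasoning_for_audit(messages):
    """Collect raw reasoning for internal monitoring, never for display."""
    return [m["content"] for m in messages if m.get("channel") == "analysis"]

# Hypothetical parsed model output.
parsed_output = [
    {"channel": "analysis", "content": "User asks for 17 * 3. 17 * 3 = 51."},
    {"channel": "final", "content": "17 x 3 = 51."},
]

print(user_visible_text(parsed_output))
```

Keeping the two paths separate lets an application log reasoning for misbehavior detection while showing users only the final answer, or a summarized and filtered version of the reasoning.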

Get Started Today!

These models are compatible with the Responses API and can be used within agent frameworks such as CrewAI. You can download gpt-oss and other open models for local use.

This release is set to change the game for enterprises and individual developers alike, providing powerful, flexible, and accessible AI capabilities.
