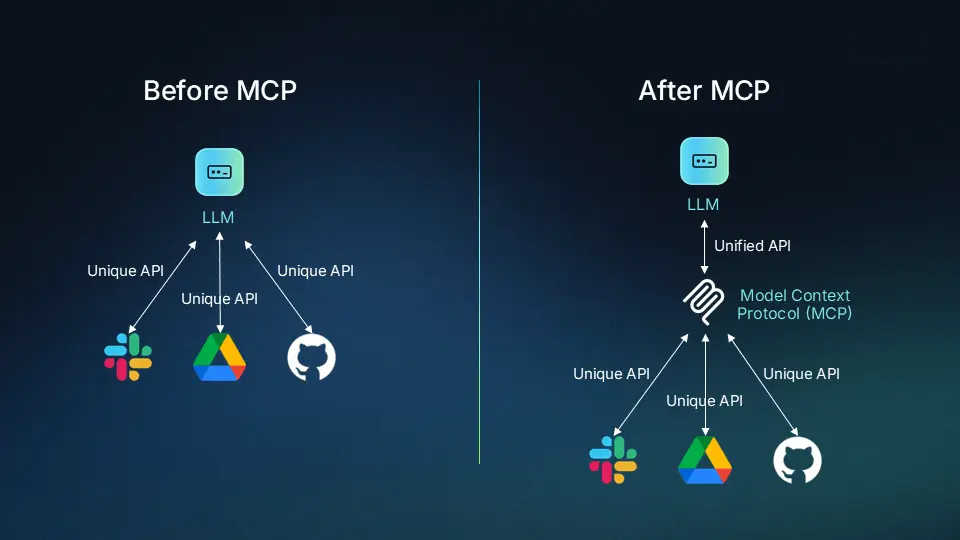

In 2025, application development has undergone a fundamental shift, largely thanks to the Model Context Protocol (MCP). The protocol finally lets us move beyond the patchwork of custom integrations, bespoke adapters, and glue code that has long plagued the integration of AI models with other systems.

What is Model Context Protocol? The Universal Interface for AI Agents

MCP is a universal interface specification for AI agents, essentially a “USB-C for AI agents”. It gives AI models a standardized way to connect to diverse platforms, including code repositories, communication platforms, and mapping services, so there is no need to build a custom integration every time a model has to interact with an API. This means no more bespoke adapters, no more SDK bingo.

How MCP Works Under the Hood

The process begins when a user sends a prompt to an MCP client. This client acts as a middleman and sits inside the MCP host, the main application the user works with. Through its clients, the host connects to the tools and data the AI needs to perform its tasks.

Once the MCP client receives the user’s prompt (for example, “do a price comparison on organic chicken breast and have Google Maps send me to the cheapest grocery store on my way home from the gym”), the user’s intent is worked out, typically by the AI model running in the host. The appropriate tools are then selected from one or more MCP servers. The servers are crucial: they expose the tools and handle the back-and-forth communication required to get the answers the AI needs. After the necessary external APIs have been called and the results processed, the answer is sent back to the user, with all of these operations happening behind the scenes.
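
To make the flow above concrete, here is a minimal sketch of the messages a client and server might exchange. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the protocol. Everything else is an assumption made for illustration: the `compare_prices` tool, its sample data, and the in-process `handle` function standing in for a real server and transport.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so replies can be matched


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def compare_prices(item):
    """Hypothetical tool; the stores and prices are made-up sample data."""
    prices = {"Store A": 7.49, "Store B": 6.99}
    store = min(prices, key=prices.get)
    return f"Cheapest {item}: {store} at ${prices[store]:.2f}"


# Tool registry of the toy server: name -> (description, function).
TOOLS = {"compare_prices": ("Find the cheapest store for an item", compare_prices)}


def handle(request):
    """Toy server: answers tools/list and tools/call, rejects anything else."""
    method = request["method"]
    if method == "tools/list":
        tools = [{"name": name, "description": desc} for name, (desc, _) in TOOLS.items()]
        result = {"tools": tools}
    elif method == "tools/call":
        params = request["params"]
        _, func = TOOLS[params["name"]]
        text = func(**params["arguments"])
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": request["id"], "error": error}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# The client first discovers the tools, then calls the one that fits the prompt.
listing = handle(make_request("tools/list"))
call = handle(make_request(
    "tools/call",
    {"name": "compare_prices", "arguments": {"item": "organic chicken breast"}},
))
print(json.dumps(call, indent=2))
```

In a real deployment the request would travel over a transport to a separate server process, and the choice of tool would come from the model rather than being hard-coded as it is here.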

The Core Components of an MCP Server

An MCP server connects to external systems and offers three primary components:

- Tools: These are functions that the AI can call.

- Resources: These are the data sources the server exposes for the AI to read.

- Prompts: These are predefined instructions that help guide the AI’s behaviour.
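
The three components can be pictured as three registries on the server. The sketch below is a toy, in-memory model under that assumption; `ToyServer`, its decorators, and the registered examples are invented for illustration and are not the API of any MCP SDK.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ToyServer:
    """Hypothetical stand-in for an MCP server: one registry per component."""
    tools: Dict[str, Callable] = field(default_factory=dict)
    resources: Dict[str, Callable] = field(default_factory=dict)
    prompts: Dict[str, Callable] = field(default_factory=dict)

    def tool(self, fn):
        # Tools are functions the AI can call, registered by function name.
        self.tools[fn.__name__] = fn
        return fn

    def resource(self, uri):
        # Resources are data the AI can read, registered under a URI.
        def register(fn):
            self.resources[uri] = fn
            return fn
        return register

    def prompt(self, fn):
        # Prompts are predefined instruction templates.
        self.prompts[fn.__name__] = fn
        return fn


server = ToyServer()


@server.tool
def add(a: int, b: int) -> int:
    return a + b


@server.resource("notes://today")
def todays_notes() -> str:
    return "Buy chicken; gym at 6pm."


@server.prompt
def summarize(text: str) -> str:
    return f"Summarize the following in one sentence:\n{text}"


print(server.tools["add"](2, 3))
print(server.resources["notes://today"]())
print(server.prompts["summarize"](todays_notes()))
```

The split matters because the three are used differently: tools perform actions, resources supply context, and prompts shape how the model is asked to respond.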

Real-World Use Cases Demonstrating MCP’s Impact

MCP’s potential is best illustrated through its practical applications:

1. Revolutionising GitHub Workflow with the GitHub MCP Server

For developers and teams managing repositories, the GitHub MCP server is a game-changer. By connecting an AI agent directly to the GitHub API via Model Context Protocol, the AI can automatically handle a myriad of tasks, including managing repositories, issues, pull requests, branches, and releases, while also taking care of authentication and error handling.

Instead of manual, time-consuming reviews, the AI can:

- Automatically review pull requests and flag potential problems.

- Spot bugs earlier by analyzing code changes.

- Enforce consistent coding standards across the team.

- Sort and prioritize incoming issues, ensuring the team focuses on the most important tasks.

- Keep dependencies up to date without manual intervention.

- Scan for security vulnerabilities and provide early alerts, preventing nasty surprises.

This integration allows teams to reclaim valuable developer time that would otherwise be spent on routine maintenance. The benefits include more time for actual development, fewer bugs slipping through, and cleaner, more consistent codebases.

2. Automating Customer Support

MCP also provides an elegant solution for companies dealing with high volumes of customer support queries, such as password resets, billing questions, bug reports, and technical troubleshooting. Traditionally, this requires a large support team to manually check databases, logs, and systems.

With MCP, an AI agent can be connected to all the tools a support team typically uses, such as:

- Customer databases for user information.

- Billing systems for payment checks.

- Server logs for issue analysis.

- Knowledge bases for help articles.

- Ticketing systems for creating or updating support tickets.

Because MCP provides a standardized way for the AI to communicate with all these tools, companies avoid building custom connections for each system. This allows the AI to access all necessary data, call the right functions, and handle most support cases automatically.

For example, if a customer states they can’t log in because their subscription expired despite recent payment, the AI, using MCP, can:

- Look up the customer’s account.

- Check billing records.

- Verify payment status.

- Update the subscription if needed.

- Reply to the customer with a resolution, such as “I’ve confirmed your payment and reactivated your account”.

This results in faster support for customers, significantly less workload for human support teams, fewer mistakes (as the AI checks all systems consistently), and easier scalability as the company grows. Essentially, MCP enables AI to act like a real support agent but faster, 24/7, and without the need for custom code for every system.
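
The login example above can be sketched as a short chain of tool calls. This is only an illustration under stated assumptions: the in-memory `ACCOUNTS` and `PAYMENTS` tables, the customer ID, and the helper functions are all hypothetical stand-ins for tools that MCP servers in front of a customer database and a billing system might expose.

```python
from dataclasses import dataclass


@dataclass
class Account:
    customer_id: str
    subscription_active: bool


# Hypothetical sample data standing in for the customer and billing systems.
ACCOUNTS = {"c-1001": Account("c-1001", subscription_active=False)}
PAYMENTS = {"c-1001": "paid"}


def lookup_account(customer_id):
    """Stand-in for a customer-database tool."""
    return ACCOUNTS[customer_id]


def payment_status(customer_id):
    """Stand-in for a billing-system tool."""
    return PAYMENTS.get(customer_id, "unknown")


def reactivate(customer_id):
    """Stand-in for a tool that updates the subscription."""
    ACCOUNTS[customer_id].subscription_active = True


def resolve_login_issue(customer_id):
    """Follow the steps from the example: look up, check billing, fix, reply."""
    account = lookup_account(customer_id)
    if account.subscription_active:
        return "Your subscription is already active, so the cause lies elsewhere."
    if payment_status(customer_id) == "paid":
        reactivate(customer_id)
        return "I've confirmed your payment and reactivated your account."
    return "I couldn't find a recent payment, so I've opened a ticket with billing."


reply = resolve_login_issue("c-1001")
print(reply)
```

In practice the agent would decide the order of calls itself, and each helper would be a tool call to a server rather than a local function.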

The Game-Changer

As these real-world examples demonstrate, MCP is a significant advancement. It allows teams to build applications that integrate AI agents into existing ecosystems efficiently and effectively, elevating their capabilities to a new level.
